By T.J. Mertzimekis

1. Introduction to Natural Language Processing and Machine Learning

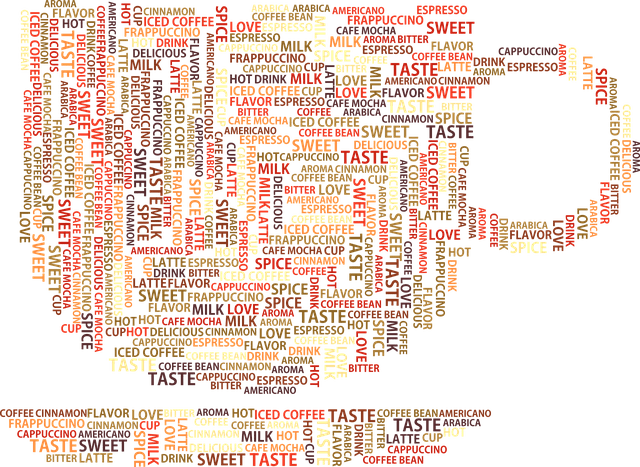

Natural Language Processing (NLP) is a fascinating field at the intersection of linguistics, computer science, and artificial intelligence. It focuses on the interaction between computers and human language, aiming to enable machines to understand, interpret, and generate human language in a way that is both meaningful and useful. Machine Learning (ML), a subset of artificial intelligence, has revolutionized NLP in recent years. ML algorithms allow computers to learn from and make predictions or decisions based on data, without being explicitly programmed to perform specific tasks. The synergy between NLP and ML has led to remarkable advancements in how machines process and understand human language. In this blog post, we'll explore the fundamental concepts of NLP, dive into various machine learning approaches used in NLP, and examine some of the most exciting applications and challenges in this rapidly evolving field.

2. Key Concepts in NLP

Before we delve into the machine learning aspects of NLP, it's crucial to understand some fundamental concepts:

2.1. Tokenization: The process of breaking down text into smaller units, typically words or subwords. Example: "I love NLP!" → ["I", "love", "NLP", "!"]
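To make the idea concrete, here is a minimal regex-based tokenizer sketch. The pattern is an illustrative assumption, not a standard: it keeps runs of word characters (allowing one internal apostrophe, as in "don't") and treats every other non-space character as its own token. Production systems often use subword tokenizers instead.

```python
import re

# Assumed pattern: a word (letters/digits/underscore, optionally with an internal
# apostrophe) OR any single character that is neither a word character nor whitespace.
TOKEN_PATTERN = re.compile(r"\w+(?:'\w+)?|[^\w\s]")

def tokenize(text: str) -> list[str]:
    """Split text into word and punctuation tokens, discarding whitespace."""
    return TOKEN_PATTERN.findall(text)

print(tokenize("I love NLP!"))  # ['I', 'love', 'NLP', '!']
```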

2.2. Part-of-Speech (POS) Tagging: Labeling words with their grammatical categories. Example: "The cat sat on the mat" → The (determiner), cat (noun), sat (verb), on (preposition), the (determiner), mat (noun)

2.3. Named Entity Recognition (NER): Identifying and classifying named entities in text into predefined categories. Example: "Apple Inc. was founded by Steve Jobs in Cupertino, California" → Apple Inc. (Organization), Steve Jobs (Person), Cupertino (Location), California (Location)

2.4. Sentiment Analysis: Determining the emotional tone behind a piece of text. Example: Classifying a product review as positive, negative, or neutral.

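2.5. Language Modeling: Predicting the probability of a sequence of words, usually by estimating the probability of each word given the words that precede it. Applications: Machine translation and speech recognition, where a language model helps rank candidate outputs by how likely they are.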
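As a small illustration, a bigram model approximates the probability of a sentence as the product of P(word | previous word), estimated from counts. This is a sketch over a made-up three-sentence corpus; the `<s>`/`</s>` boundary markers are a common convention, and the model deliberately has no smoothing, so any unseen word pair drives the probability to zero.

```python
from collections import Counter

def train_bigram_lm(sentences):
    """Count context words and word pairs, padding each sentence with <s> and </s>."""
    unigrams, bigrams = Counter(), Counter()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        unigrams.update(tokens[:-1])                # every token that precedes another one
        bigrams.update(zip(tokens[:-1], tokens[1:]))
    return unigrams, bigrams

def sequence_probability(sentence, unigrams, bigrams):
    """P(w1..wn) approximated as the product of P(w_i | w_{i-1}) (maximum-likelihood)."""
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    prob = 1.0
    for prev, word in zip(tokens[:-1], tokens[1:]):
        if unigrams[prev] == 0:
            return 0.0                              # context never seen in training
        prob *= bigrams[(prev, word)] / unigrams[prev]
    return prob

corpus = ["the cat sat", "the dog sat", "the cat ran"]   # hypothetical training data
unigrams, bigrams = train_bigram_lm(corpus)
print(sequence_probability("the cat sat", unigrams, bigrams))
```

Here "the cat sat" gets probability 1 × 2/3 × 1/2 × 1 = 1/3, while "the dog ran" gets 0 because "dog ran" never appears in the corpus. That zero is exactly the data-sparsity problem that smoothing and neural language models address.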

2.6. Word Embeddings: Dense vector representations of words that capture semantic relationships. Popular models: Word2Vec, GloVe, FastText
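Semantic similarity between embeddings is typically measured with cosine similarity. The sketch below uses hypothetical three-dimensional vectors invented for illustration. Real Word2Vec, GloVe, or FastText vectors are learned from large corpora and usually have hundreds of dimensions.

```python
import math

# Hypothetical toy vectors; NOT taken from any trained model.
EMBEDDINGS = {
    "king":  [0.8, 0.65, 0.1],
    "queen": [0.75, 0.7, 0.15],
    "apple": [0.1, 0.2, 0.9],
}

def cosine_similarity(u, v):
    """Cosine of the angle between u and v: 1 means same direction, 0 means orthogonal."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def most_similar(word, embeddings):
    """Return the other vocabulary word whose vector is closest in cosine similarity."""
    target = embeddings[word]
    candidates = (w for w in embeddings if w != word)
    return max(candidates, key=lambda w: cosine_similarity(target, embeddings[w]))

print(most_similar("king", EMBEDDINGS))  # queen
```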

Understanding these concepts is essential as we explore how machine learning has transformed NLP.

Figure 1. Word cloud illustrating key concepts and applications in Natural Language Processing and Machine Learning.

3. Machine Learning Approaches in NLP

Machine learning approaches in NLP can be broadly divided into two groups: traditional ML methods and deep learning methods.

3.1. Traditional ML Methods: Traditional machine learning methods in NLP typically involve feature engineering and statistical models. Some popular approaches include:

3.1a. Naive Bayes:

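A probabilistic classifier based on Bayes' theorem, often used for text classification tasks like spam detection or sentiment analysis. It is called "naive" because it assumes that words occur independently of one another once the class is known. Example: In spam detection, we might calculate the probability of a message being spam given the presence of certain words: P(spam | "win", "free", "money") = P("win", "free", "money" | spam) * P(spam) / P("win", "free", "money"). Under the independence assumption, the likelihood factorizes as P("win" | spam) * P("free" | spam) * P("money" | spam). Because the denominator is the same for every class, the classifier simply picks the class with the larger numerator.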
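The following is a minimal multinomial Naive Bayes sketch under a few stated assumptions: whitespace tokenization, add-one (Laplace) smoothing, and a tiny made-up training set. It relies on the "naive" assumption that words are independent given the class, so the class score is the prior times a product of per-word likelihoods. It sums log-probabilities rather than multiplying raw probabilities, which avoids numeric underflow on long messages.

```python
import math
from collections import Counter

def train_naive_bayes(docs):
    """docs: list of (text, label) pairs. Returns class priors, per-class word counts, vocabulary."""
    label_counts = Counter(label for _, label in docs)
    word_counts = {label: Counter() for label in label_counts}
    for text, label in docs:
        word_counts[label].update(text.lower().split())
    vocab = set()
    for counts in word_counts.values():
        vocab.update(counts)
    priors = {label: n / len(docs) for label, n in label_counts.items()}
    return priors, word_counts, vocab

def classify(text, priors, word_counts, vocab):
    """Return the label maximizing log P(label) + sum of log P(word | label)."""
    scores = {}
    for label, prior in priors.items():
        total = sum(word_counts[label].values())
        score = math.log(prior)
        for word in text.lower().split():
            if word not in vocab:
                continue  # words never seen in training carry no evidence in this sketch
            # Add-one smoothing keeps unseen word/class pairs from producing log(0).
            score += math.log((word_counts[label][word] + 1) / (total + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)

training = [  # hypothetical examples
    ("win free money now", "spam"),
    ("free money offer", "spam"),
    ("meeting schedule for monday", "ham"),
    ("lunch with the team on monday", "ham"),
]
priors, word_counts, vocab = train_naive_bayes(training)
print(classify("win free money", priors, word_counts, vocab))  # spam
```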

3.1b. Support Vector Machines (SVM):

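A classifier that finds the hyperplane separating the classes with the largest possible margin, that is, the greatest distance to the nearest training examples. This suits text well because documents are usually represented as high-dimensional, sparse feature vectors such as word counts or TF-IDF weights. Application: SVMs can be used for text classification tasks, such as categorizing news articles into topics.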
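To show the margin idea in code, here is a sketch of a linear soft-margin SVM trained by stochastic sub-gradient descent on the regularized hinge loss. This is a simple substitute for the quadratic-programming solvers that SVM libraries typically use. The four-word feature set, the tiny dataset, and the hyperparameters (`lam`, `lr`, `epochs`) are all assumptions chosen for illustration.

```python
def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=50):
    """Minimize lam/2 * ||w||^2 + hinge loss max(0, 1 - y * (w.x + b)) using
    stochastic sub-gradient descent. Labels in y must be -1 or +1."""
    w = [0.0] * len(X[0])
    b = 0.0
    for _ in range(epochs):
        for x, label in zip(X, y):
            margin = label * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            # Gradient of the L2 regularizer: shrink every weight slightly.
            w = [wi * (1 - lr * lam) for wi in w]
            if margin < 1:
                # Hinge loss is active (inside the margin or misclassified):
                # move the hyperplane so x is classified correctly with more margin.
                w = [wi + lr * label * xi for wi, xi in zip(w, x)]
                b += lr * label
    return w, b

def svm_predict(w, b, x):
    """Return +1 or -1 depending on which side of the hyperplane x falls."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1

# Hypothetical word-count features: ["goal", "match", "election", "vote"]
X = [[2, 1, 0, 0], [1, 2, 0, 0], [0, 0, 2, 1], [0, 0, 1, 2]]
y = [1, 1, -1, -1]  # +1 = sports, -1 = politics
w, b = train_linear_svm(X, y)
print(svm_predict(w, b, [1, 1, 0, 0]))  # 1 (sports)
```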

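3.1c. Decision Trees and Random Forests: Tree-based models that classify an input by applying a sequence of feature tests, moving from the root of the tree down to a leaf that holds the prediction. A random forest combines the votes of many such trees, each trained on a bootstrap sample of the data and considering a random subset of features at each split. Example: A decision tree for sentiment analysis might first split on the presence of words like "great" or "terrible", then consider other features like sentence length or punctuation.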
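How does a tree decide to split on "great" first? One common criterion, used by ID3-style learners, is information gain: the reduction in label entropy achieved by the split. The sketch below scores only that single step on four hypothetical reviews. It is not a complete tree learner.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    counts = Counter(labels)
    total = len(labels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

def information_gain(docs, labels, word):
    """Entropy reduction from splitting documents on whether they contain `word`."""
    with_word = [lab for doc, lab in zip(docs, labels) if word in doc.lower().split()]
    without_word = [lab for doc, lab in zip(docs, labels) if word not in doc.lower().split()]
    weighted = sum(len(part) / len(labels) * entropy(part)
                   for part in (with_word, without_word) if part)
    return entropy(labels) - weighted

docs = ["a great movie", "great acting", "terrible plot", "a terrible movie"]  # hypothetical
labels = ["pos", "pos", "neg", "neg"]
best = max(["a", "great", "movie"], key=lambda w: information_gain(docs, labels, w))
print(best)  # great
```

Splitting on "great" separates the classes perfectly (gain of 1 bit). Splitting on "movie" leaves both branches evenly mixed (gain of 0), so the learner would prefer "great" as the root test.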

3.1d. Hidden Markov Models (HMM): Probabilistic models that assume the system being modeled is a Markov process with unobserved (hidden) states, each of which emits an observable symbol. Application: HMMs are often used in POS tagging, where the hidden states are the POS tags and the observations are the words.
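Tagging with an HMM means finding the most likely hidden tag sequence for the observed words, which the Viterbi algorithm does with dynamic programming. In the sketch below, the three tags and all probability tables are hypothetical values picked by hand. In practice they are estimated from a tagged corpus, and implementations usually work in log space to avoid underflow.

```python
def viterbi(words, tags, start_p, trans_p, emit_p):
    """Return the most likely tag sequence for `words` under an HMM."""
    # best[i][tag] = (probability of the best path ending in `tag` at word i, previous tag)
    best = [{t: (start_p[t] * emit_p[t].get(words[0], 0.0), None) for t in tags}]
    for i in range(1, len(words)):
        column = {}
        for t in tags:
            # Best predecessor p: path probability * transition p->t * emission of word i.
            column[t] = max(
                (best[i - 1][p][0] * trans_p[p][t] * emit_p[t].get(words[i], 0.0), p)
                for p in tags
            )
        best.append(column)
    # Trace back from the most probable final tag.
    path = [max(tags, key=lambda t: best[-1][t][0])]
    for i in range(len(words) - 1, 0, -1):
        path.append(best[i][path[-1]][1])
    return path[::-1]

# Hypothetical, hand-picked probabilities.
TAGS = ["DET", "NOUN", "VERB"]
START = {"DET": 0.6, "NOUN": 0.3, "VERB": 0.1}
TRANS = {
    "DET":  {"DET": 0.05, "NOUN": 0.9, "VERB": 0.05},
    "NOUN": {"DET": 0.1, "NOUN": 0.3, "VERB": 0.6},
    "VERB": {"DET": 0.5, "NOUN": 0.3, "VERB": 0.2},
}
EMIT = {
    "DET":  {"the": 0.7, "a": 0.3},
    "NOUN": {"cat": 0.4, "mat": 0.3, "dog": 0.2, "sat": 0.1},
    "VERB": {"sat": 0.8, "ran": 0.2},
}
print(viterbi("the cat sat".split(), TAGS, START, TRANS, EMIT))  # ['DET', 'NOUN', 'VERB']
```

Note that "sat" can be emitted by both NOUN and VERB here. The transition NOUN → VERB is what tips the decision toward VERB.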

3.2. Deep Learning Methods: Deep learning, a subset of machine learning based on artificial neural networks, has led to significant breakthroughs in NLP. Some key deep learning approaches include:

3.2a. Recurrent Neural Networks (RNN): Neural networks designed for sequence data. They process one token at a time while carrying a hidden state forward, which makes them well suited to many NLP tasks. Variants: Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) networks, which use gating mechanisms to mitigate the vanishing gradient problem of traditional RNNs. Application: Language modeling, machine translation, sentiment analysis.
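The core recurrence of a vanilla (Elman) RNN fits in a few lines: each new hidden state is h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h). The forward-pass sketch below uses small hypothetical weights and omits training entirely. LSTM and GRU cells replace this single tanh update with gated updates.

```python
import math

def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Run a vanilla RNN over a sequence of input vectors and return every hidden state."""
    hidden_size = len(b_h)
    h = [0.0] * hidden_size  # initial hidden state
    states = []
    for x in inputs:
        # The whole list is built from the previous h before h is rebound.
        h = [
            math.tanh(
                sum(W_xh[j][k] * x[k] for k in range(len(x)))
                + sum(W_hh[j][k] * h[k] for k in range(hidden_size))
                + b_h[j]
            )
            for j in range(hidden_size)
        ]
        states.append(h)
    return states

# Hypothetical weights for 2-d inputs and a 2-unit hidden state.
W_xh = [[0.5, -0.3], [0.1, 0.8]]
W_hh = [[0.2, 0.0], [0.0, 0.2]]
b_h = [0.0, 0.0]
inputs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # e.g. three word vectors
states = rnn_forward(inputs, W_xh, W_hh, b_h)
```

Because W_hh feeds the previous state back in, the second hidden state depends on the first word as well as the second. This memory is what makes RNNs suitable for sequences.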

3.2b. Convolutional Neural Networks (CNN): While primarily used in computer vision, CNNs have also shown promise in certain NLP tasks. Application: Text classification, sentiment analysis, and as feature extractors in more complex NLP models.
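In text CNNs, a filter typically spans a window of consecutive word vectors (an n-gram) and slides along the sentence. Max-over-time pooling then keeps the filter's strongest response. The sketch below shows one such filter of width 2, followed by ReLU and max pooling. The 2-d word vectors and kernel values are hypothetical, and a real model learns many filters of several widths.

```python
def conv1d_max_pool(embeddings, kernel, bias=0.0):
    """Slide one filter over windows of consecutive word vectors, apply ReLU,
    and max-pool over time. Returns (pooled feature, per-window activations)."""
    width = len(kernel)  # number of consecutive words the filter covers
    if len(embeddings) < width:
        raise ValueError("sentence is shorter than the filter width")
    activations = []
    for start in range(len(embeddings) - width + 1):
        window = embeddings[start:start + width]
        # Dot product between the filter and the flattened window of word vectors.
        score = bias + sum(
            k * v
            for kernel_row, word_vec in zip(kernel, window)
            for k, v in zip(kernel_row, word_vec)
        )
        activations.append(max(0.0, score))  # ReLU
    return max(activations), activations

sentence = [[1, 0], [0, 1], [1, 1], [0, 0]]  # hypothetical 2-d vectors for 4 words
kernel = [[1, 0], [0, 1]]                    # hypothetical width-2 (bigram) filter
pooled, activations = conv1d_max_pool(sentence, kernel)
print(activations, pooled)  # [2.0, 1.0, 1.0] 2.0
```

Max pooling makes the feature independent of where in the sentence the matching bigram occurs, which is one reason this design works for classification.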

3.2c. Transformer Models: A novel architecture that relies on attention mechanisms, dispensing with recurrence and convolutions entirely. Key Innovation: Self-attention mechanism, which allows the model to weigh the importance of different words in a sentence when processing each word. Famous Examples: BERT (Bidirectional Encoder Representations from Transformers), GPT (Generative Pre-trained Transformer), T5 (Text-to-Text Transfer Transformer). Application: These models have achieved state-of-the-art results on a wide range of NLP tasks, including language understanding, translation, summarization, and question answering.
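The weighting step at the heart of self-attention is scaled dot-product attention: softmax(Q Kᵀ / √d_k) V. The sketch below makes two simplifying assumptions. The queries, keys, and values are all set to the raw token vectors, whereas real transformers compute them with learned linear projections and run several attention heads in parallel. The token vectors themselves are hypothetical.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(Q, K, V):
    """Compute softmax(Q K^T / sqrt(d_k)) V, one output row per query.
    Returns (outputs, attention weights)."""
    d_k = len(K[0])
    outputs, all_weights = [], []
    for q in Q:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)  # how much this query attends to each position
        outputs.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
        all_weights.append(weights)
    return outputs, all_weights

X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # hypothetical vectors for a 3-token sentence
outputs, weights = scaled_dot_product_attention(X, X, X)  # self-attention: Q = K = V = X
```

Each row of `weights` sums to 1. The first token attends equally to itself and to the third token, since both have the same dot product with it, and less to the second token, which is orthogonal to it.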

4. Popular NLP Tasks and Applications

Machine learning has enabled significant advancements in various NLP tasks and applications. Here are some of the most popular ones:

4.1. Machine Translation: Automatically translating text or speech from one language to another. Example: Google Translate, which uses neural machine translation models.

4.2. Sentiment Analysis: Determining the emotional tone behind a piece of text. Application: Analyzing customer reviews, social media monitoring.

4.3. Text Summarization: Generating concise and coherent summaries of longer texts. Types: Extractive (selecting important sentences) and abstractive (generating new text).
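Extractive summarization can be illustrated with a classic frequency heuristic: score each sentence by how frequent its content words are in the whole text, then keep the top sentences in their original order. This is a simple baseline sketch, not how modern neural summarizers work. The sentence splitter, the tiny stopword list, and the averaging rule are all assumptions.

```python
import re
from collections import Counter

# Small illustrative stopword list (an assumption; real systems use larger lists).
STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "on", "is", "it"}

def content_tokens(text):
    return [t for t in re.findall(r"\w+", text.lower()) if t not in STOPWORDS]

def extractive_summary(text, num_sentences=1):
    """Score each sentence by the average document frequency of its content words
    and return the top-scoring sentences in their original order."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    freq = Counter(content_tokens(text))

    def score(sentence):
        tokens = content_tokens(sentence)
        return sum(freq[t] for t in tokens) / max(len(tokens), 1)

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:num_sentences])  # restore original sentence order
    return " ".join(sentences[i] for i in keep)

article = ("Neural networks learn language patterns. "
           "Neural networks power translation. "
           "The weather was sunny.")  # hypothetical input
print(extractive_summary(article, num_sentences=2))
```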

4.4. Question Answering: Building systems that can automatically answer questions posed in natural language. Example: IBM Watson, which famously competed on the quiz show Jeopardy!

4.5. Chatbots and Conversational AI: Creating systems that can engage in human-like dialogue. Application: Customer service, virtual assistants like Siri or Alexa.

4.6. Text Generation: Producing human-like text based on input prompts or conditions. Example: GPT-3, which can generate coherent articles, stories, and even code.

4.7. Named Entity Recognition: Identifying and classifying named entities (e.g., person names, organizations, locations) in text. Application: Information extraction, content classification.

4.8. Speech Recognition: Converting spoken language into text. Example: Voice-to-text applications, voice commands in smartphones.

These applications demonstrate the wide-ranging impact of machine learning in NLP, from enhancing communication across languages to enabling more natural interactions with AI systems.

5. Challenges in NLP

Despite the remarkable progress in NLP, several challenges remain:

5.1. Ambiguity: Natural language is often ambiguous, with words and phrases having multiple meanings depending on context. Example: The word "bank" could refer to a financial institution or the edge of a river.
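A classic baseline for resolving this kind of ambiguity is the simplified Lesk algorithm: choose the sense whose dictionary definition shares the most words with the surrounding context. In the sketch below, the two glosses and the stopword list are hypothetical stand-ins for a real lexical resource such as WordNet. The sketch also does no stemming, so "deposited" does not match "deposits".

```python
# Hypothetical sense inventory for "bank".
SENSES = {
    "bank/finance": "an institution that accepts deposits and lends money",
    "bank/river": "the sloping land along the edge of a river or stream",
}
STOPWORDS = {"the", "a", "an", "of", "or", "and", "that", "to", "on", "at", "my"}

def content_words(text):
    """Lowercased words with trailing punctuation stripped, minus stopwords."""
    return {w.strip(".,!?").lower() for w in text.split()} - STOPWORDS

def simplified_lesk(context, senses):
    """Pick the sense whose gloss overlaps most with the context's content words."""
    context_words = content_words(context)
    return max(senses, key=lambda s: len(context_words & content_words(senses[s])))

print(simplified_lesk("I deposited money at the bank", SENSES))        # bank/finance
print(simplified_lesk("We fished from the bank of the river", SENSES))  # bank/river
```

Word overlap is a weak signal, which illustrates why contextual embeddings from models such as BERT tend to handle ambiguity better.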

5.2. Contextual Understanding: Machines often struggle to understand context, sarcasm, and implicit information that humans easily grasp.

5.3. Multilinguality: Developing models that perform well across multiple languages, especially low-resource languages, remains challenging.

5.4. Bias: ML models can inadvertently learn and amplify biases present in training data, leading to unfair or discriminatory outcomes.

5.5. Common Sense Reasoning: While ML models excel at pattern recognition, they often lack common sense understanding that humans take for granted.

5.6. Computational Resources: State-of-the-art NLP models often require significant computational resources for training and deployment.

5.7. Data Privacy: NLP applications often deal with sensitive personal data, raising concerns about privacy and data protection.

Addressing these challenges is crucial for the continued advancement of NLP and its ethical application in real-world scenarios.

6. Future Trends

The field of NLP is rapidly evolving, with several exciting trends on the horizon:

6.1. Multimodal Learning: Combining language processing with other forms of data, such as images or audio, to enable more comprehensive understanding.

6.2. Few-Shot and Zero-Shot Learning: Developing models that can perform well on new tasks with minimal or no task-specific training data.

6.3. Explainable AI: Creating NLP models that can not only make predictions but also explain their reasoning in a way humans can understand.

6.4. Efficient NLP: Developing more computationally efficient models to reduce the environmental impact and make NLP more accessible.

6.5. Continued Scaling: Exploring the capabilities of even larger language models and their potential applications.

6.6. Domain-Specific Models: Fine-tuning large language models for specific domains like healthcare, finance, or legal to improve performance on specialized tasks.

6.7. Ethical AI: Increasing focus on developing fair, unbiased, and transparent NLP systems.

These trends suggest an exciting future for NLP, with potential applications that could revolutionize how we interact with technology and process information.

7. Closing Remarks

Machine learning has undeniably transformed the field of Natural Language Processing, enabling remarkable advancements in how computers understand and generate human language. From traditional statistical methods to cutting-edge deep learning approaches, ML has pushed the boundaries of what's possible in NLP.

As we've explored in this blog post, the applications of ML in NLP are vast and varied, ranging from machine translation and sentiment analysis to question answering systems and text generation. These technologies are not just academic curiosities but are increasingly integral to our daily lives, powering everything from virtual assistants to content recommendation systems.

However, as with any powerful technology, the application of ML in NLP comes with its own set of challenges and ethical considerations. Issues of bias, privacy, and the environmental impact of large-scale models are at the forefront of current discussions in the field.

Looking to the future, the continued evolution of NLP promises even more exciting developments. From multimodal learning to more efficient and explainable models, the field is poised for further breakthroughs that could reshape our interaction with language and technology.